Experimental Residency

For the past two months I've been working at the LKV Residency in Trondheim, Norway. This page sums up the work that I've been doing. Prior to the residency I decided to continue with my script based on the Platonic allegories of the Cave and the Divided Line and to transform those into what Reza Negarestani dubbed a "reality game in four levels." The initial idea started from a generative notion of the Demiurge, so bottom-up instead of top-down, but in the first week I noticed this would demand substantially more time than the two months I had for the residency. Since the script for the game is already in place, I decided to continue with a more experimental approach; below you can see the bifurcations of that initial idea.

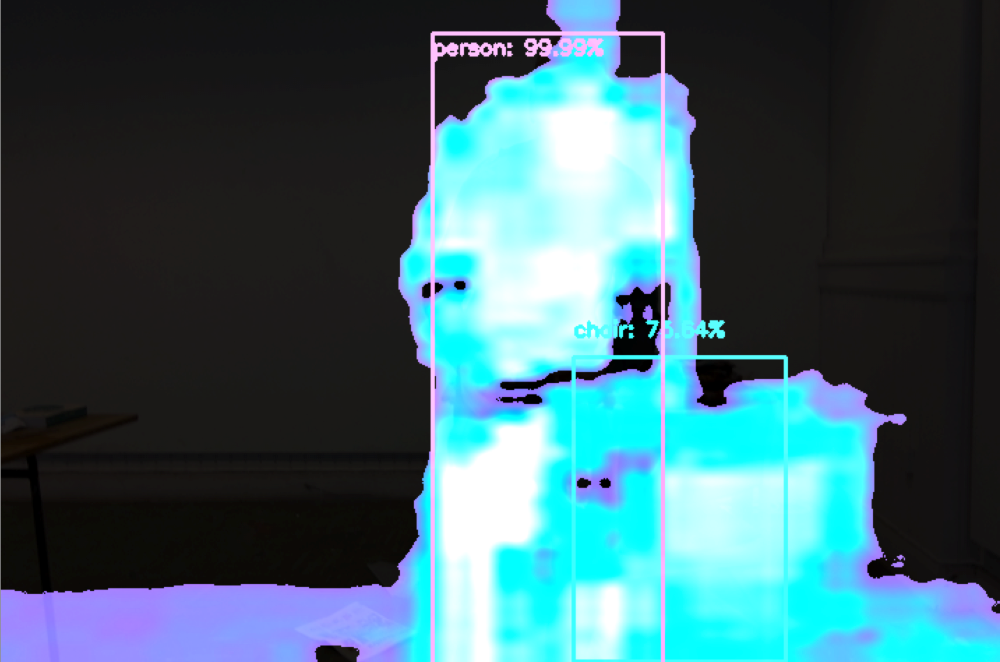

Prototype 1 | Neural Interaction

Keywords: Artificial Synaesthesia, CNNs, Object Detection, Facial Feature Extraction, Feature Maps, Platonic Shadows, Fourier Transform and Inverse, Python (of course)

This experiment took me two troubling weeks to build into a rudimentary prototype, so I'm very excited and happy to share it with you. The project is a somewhat philosophical and (syn-)aesthetic research into the layers and neurons of an artificial neural network (ANN). Without delineating the inner workings of an ANN, I can tell you that when you understand how these mechanisms function, and what they actually transcribe in their specific tasks (e.g. object detection, facial recognition, audio synthesis), you have a chance to grasp the beautiful philosophical metaphors present within these systems. Once exposed, these metaphors perfectly match existing classical allegories, e.g. Plato's Cave and the Divided Line. The aesthetic movement through the neurons of, for instance, a CNN designed for facial recognition coincides with how Plato describes, in the Republic, the movement from the visible to the intelligible. The lower-level neurons extract simple, local features such as edges and textures, and the more you move up (or down, depending on how you look at it) the more abstract the representation becomes. Hence, passing through these layers you notice that at a certain point you pass through the world of shadows of the neural network, or the NN's Cave. This project is a very loose attempt to grasp the aesthetic movement of an ANN (and, of course, of the camera, computer, objects and so on) as it attempts to perceive its world. In the video below you can clearly hear me triggering different layers of the CNN, which results in distinctly different sounds.
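
To give a rough feel for how a layer's activations could become sound, here is a minimal Python sketch. It is not the prototype's code; it assumes a feature map is simply a small grid of numbers, collapses each row into one magnitude, and then runs a naive inverse-Fourier-style sum of cosines to get audio samples. The function names and the two toy "layers" are made up for illustration.

```python
import math


def feature_map_to_spectrum(feature_map):
    """Collapse a 2D activation map into one non-negative magnitude per row."""
    return [sum(abs(v) for v in row) / len(row) for row in feature_map]


def spectrum_to_samples(magnitudes, n_samples):
    """Naive inverse Fourier step: sum one zero-phase cosine per frequency bin."""
    samples = []
    for n in range(n_samples):
        value = sum(
            mag * math.cos(2 * math.pi * k * n / n_samples)
            for k, mag in enumerate(magnitudes, start=1)  # start at bin 1, skipping DC
        )
        samples.append(value)
    peak = max(abs(s) for s in samples) or 1.0  # avoid dividing by zero on silence
    return [s / peak for s in samples]  # normalise to the range [-1, 1]


# Two invented 4x4 "feature maps" standing in for two different CNN layers.
layer_a = [[1.0, 1.0, 1.0, 1.0], [0.0] * 4, [0.0] * 4, [0.0] * 4]
layer_b = [[0.0] * 4, [0.0] * 4, [0.0] * 4, [2.0, 2.0, 2.0, 2.0]]

sound_a = spectrum_to_samples(feature_map_to_spectrum(layer_a), 64)
sound_b = spectrum_to_samples(feature_map_to_spectrum(layer_b), 64)
```

With these toy inputs, layer_a only excites the lowest bin and layer_b only the fourth, so the two lists of samples oscillate at different rates: a crude stand-in for "different layers, different sounds". A real feature map is far larger, and a fast inverse FFT would replace the slow double loop.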

This project will be developed further over the course of 2020 in collaboration with a sound artist and all concomitant code will be released then.

Prototype 2 | Real-Time Facial Motion Capture

Keywords: 3D Modelling, Unreal Engine, Texture Mapping, OSC, Python, NI Mate, Facial Landmark Detection, Kinect v2, Arduino, Wekinator

Some of the ideas I had seemed to converge towards a performing philosophy embedded in a technological body, so to speak: logical procedure, syntactical jitters, machine (un)learnings, etc. But a modular Cartesian body, disconnected per se: only a head (thinker/philosopher) and a hand (maker/artist). To connect these I wanted to build a cheap real-time facial motion capture setup (market solutions only come at exorbitant prices), as well as a hand gesture controller, also cheap...
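
OSC is one of the keywords above, and a message carrying a single facial landmark is small enough to encode by hand. The sketch below is only an illustration under stated assumptions: landmarks are taken to be normalised (x, y) floats, and the address pattern /face/landmark/<index>, the host and port 8000 are hypothetical, not taken from the actual setup. It uses only the Python standard library and follows the OSC 1.0 message layout (padded address string, padded type-tag string, big-endian 32-bit floats).

```python
import socket
import struct


def _osc_string(text):
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address, *floats):
    """Build an OSC 1.0 message whose arguments are all 32-bit big-endian floats."""
    type_tags = "," + "f" * len(floats)
    payload = b"".join(struct.pack(">f", value) for value in floats)
    return _osc_string(address) + _osc_string(type_tags) + payload


def send_landmark(index, x, y, host="127.0.0.1", port=8000):
    """Send one landmark over UDP (address pattern, host and port are assumptions)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(osc_message(f"/face/landmark/{index}", x, y), (host, port))


# Example packet for a hypothetical landmark 30 at normalised position (0.5, 0.25).
packet = osc_message("/face/landmark/30", 0.5, 0.25)
```

Calling send_landmark(30, 0.5, 0.25) once per frame for each tracked point would stream the face to whatever is listening on that port; any OSC-capable receiver would then need to be configured for the same address pattern.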